1 Using Stan in R

1.1 Why Stan?

SAMs cannot be straightforwardly implemented with currently available software for quantitative genetic analysis, such as the frequentist ASREML program (Butler et al. 2018) or the Bayesian open-source R package MCMCglmm (Hadfield 2010). The classical animal models estimated by these programs can be used to describe reaction norms defined over non-social environments, with reaction norm slopes estimated on directly measured environmental gradients. However, social environments defined by partner phenotypes present novel challenges for animal models, such as accounting for temporal feedback between social partners’ phenotypes, differentiating the effects of assortment and social plasticity between partners, and avoiding bias due to correlated residual effects on measurements taken within and among social interactions (Martin and Jaeggi 2021). SAMs address these challenges by estimating plasticity, assortment, and selection directly on the latent social reaction norms (SRNs) governing repeatable individual variation. A highly flexible modeling framework is required to estimate these latent (i.e. indirectly measured) interactions with raw empirical data, as well as to use them for predicting social evolutionary change.

Stan (Carpenter et al. 2017) is an open-source programming language for estimating probabilistic models of arbitrary complexity, which can interface with multiple statistical environments such as R (R Core Team 2020). Stan also facilitates fully Bayesian inference using state-of-the-art Markov Chain Monte Carlo (MCMC) sampling techniques. In particular, the No U-Turn Sampler (NUTS) implimented in Stan has been found to perform particularly well for quantitative genetic analysis (Nishio and Arakawa 2019). Stan is thus an ideal platform for flexibly estimating SAMs in any empirical system, as is further discussed in the main text (Martin and Jaeggi 2021). Using Bayesian posteriors rather than point estimates will also promote more robust biological inferences with SAMs, as statistical uncertainty can be easily carried forward across multiple stages of analysis (Stinchcombe, Simonsen, and Blows 2014). This provides a crucial means of quantifying uncertainty in the predicted direction and magnitude of social evolution.

1.2 Getting Started

Stan interfaces with R through the RStan package (Carpenter et al. 2017), providing an efficient means of integrating SAMs into pre-existing data analysis pipelines. However, you will first need to install Stan on your computer and ensure that it is appropriately configured with your C++ toolchain. This can be accomplished by following the instructions for your operating system on the RStan Getting Started page. Once you are able to effectively use RStan, you can begin creating the .stan files necessary for estimating SAMs. These files can be composed using RStudio or any text editor, as well as directly in R with write()

write("// for Stan comments

functions{...} // Stan models are composed of

data {...} // multiple programming blocks

transformed data {...} //only data, parameters, and model

parameters {...} //blocks are necessary

transformed parameters {...}

model {...}

generated quantities {...} ",

"mod1.stan")Once an appropriate .stan file is prepared, it can be compiled in R for the C++ toolchain using the stan_model() function and subsequently estimated with an appropriate list of empirical data using the sampling() function. The resulting posteriors of a model can then be accessed with the extract() function and manipulated for any further quantities or analyses of interest.

#load package

library(rstan)

#compiles the model in C++ for MCMC estimation

mod1 = stan_model("mod1.stan")

#samples posterior distribution of the model with default MCMC settings

results = sampling(object = mod1, data = data)

#extracts posterior estimates

samples = extract(results)1.3 Bayesian inference

A detailed overview of the benefits of Bayesian inference is beyond the scope of this guidebook, as attention is placed on coding and computational concerns rather than interpretation. We encourage researchers unfamiliar with fully Bayesian inference to see McElreath (2020) for further discussion. Lemoine (2019) also demonstrates why weakly regularizing (or “weakly informative”) priors are often preferable to the flat or diffuse priors more commonly used in evolutionary ecology. In general, we encourage researchers to utilize the benefits of fully Bayesian inference while working in Stan, rather than attempting to mimic classical inference and null-hypothesis testing approaches. Gelman et al. (2020) provide a very useful general discussion of Bayesian workflow from initial estimation to model comparison and selection. A basic understanding of MCMC and prior and posterior distributions is necessary to fully understand model estimation in Stan. MCMC provides a means of approximating any continous probability distribution, with a finite set of samples taken in proportion to the underlying target probability density. As a consequence, Stan models return objects with many MCMC samples for each model parameter, rather than single point estimates. These samples can then be summarized to approximate the shape of the truly continous target posterior distribution, as is shown throughout the coding tutorials.

1.4 Basic coding tutorial

Stan uses its own language for writing probabilistic models, including a variety of built-in functions designed to aid in efficient computation. The biggest conceptual hurdle for new users of Stan is likely to be the absence of an intuitive R-like syntax for specifying model formulas, such as formulas like y ~ x + (1|z) that can be used to quickly specify complex generalized linear mixed-effects models. These formulas facilitate highly efficient statistical modeling, but do so at the cost of limiting users’ ability to specify atypical model structures. Instead, Stan provides the benefit of nearly unlimited flexibility in model specification, with the added cost of a steeper learning curve. In particular, models must be formally specified with mathematically appropriate likelihood functions, rather than this process being handled on the back-end through textual inputs from the user such as family= poisson(link = "log"). This may at first seem like a cumbersome task, but it provides a degree of independence and creativity for data analysis that is otherwise unavailable. It is this autonomy that makes it possible to unbiasedly estimate SAMs in Stan, which to the best of our knowledge cannot be accomplished with any other mainstream statistical software. Nonetheless, it is important to recognize that some practice and trial-and-error will be required to gain competency and comfortability with Stan. We therefore encourage those interested in SAMs to review the Stan Reference Manual, as well the extensive collection of Stan Case Studies, which will provide a more robust foundation for estimating any model of interest in Stan.

Here we review some basics of Stan that will be necessary for following the coding tutorials in the rest of the guidebook. To make this introduction more concrete, we simulate a simple data structure appropriately described by a Gaussian random regression model, with 50 subjects and 2 repeated measures per subject across an environmental gradient \(x\). Formally, the model for observation i of individual j is given by

\[z_{ij}=\mu_0+\mu_{j}+\left( \beta_0 + \beta_{j} \right) x_{ij}+\epsilon_i\] \[ \begin{bmatrix} \boldsymbol{\mu} \\ \boldsymbol{\beta_{\mathrm{ }}} \end{bmatrix} \sim \mathrm{MVNormal}(\boldsymbol{0}, \boldsymbol{\mathrm{P}} ) : \boldsymbol{\mathrm{ }} = \begin{bmatrix} \mathrm{Var}( {\mu} ) & \mathrm{Cov}( {\mu}, \boldsymbol{\beta_{\mathrm{ }}}) \\ \mathrm{Cov}(\boldsymbol{\beta_{\mathrm{ }}}, {\mu} ) & \mathrm{Var}( \boldsymbol{\beta_{\mathrm{ }}} ) \end{bmatrix} \]

\[ \boldsymbol{\epsilon} \sim \mathrm{Normal}(0, \boldsymbol{\Sigma} ): \boldsymbol{\Sigma} = [\mathrm{Var}(\boldsymbol{\epsilon})]\]

where \(\mu_0\) and \(\beta_1\) are fixed population-level intercepts and slopes respectively, with the vectors \(\boldsymbol{\mu_{\mathrm{ }}}\) and \(\boldsymbol{\beta_{\mathrm{ }}}\) containing individual-specific phenotypic deviations from the population values (i.e. random intercepts and slopes). The probability density function of this Gaussian variable can be equivalently written as

\[z_{ij} \sim \mathrm{Normal}(\mu_0 + \mu_{j}+ \left( \beta_1 + \beta_{j} \right) x_{ij}, \boldsymbol{\Sigma})\] It is often easier to specify model likelihoods and priors over standard deviations and correlation matrices in Stan, rather than the variances and covariances represented in the formal model. These parameters can always be derived from one another with simple transformations. For variances and standard deviations

\[\mathrm{SD}( \boldsymbol{\mu_{\mathrm{ }}} ) = \mathrm{Sqrt(Var} (\boldsymbol{\boldsymbol{\mu_{\mathrm{ }}} }) ) , \quad \mathrm{SD}( \boldsymbol{\beta_{\mathrm{ }}} ) = \mathrm{Sqrt(Var} (\boldsymbol{\boldsymbol{\beta_{\mathrm{ }}} }) )\]

Similarly, the covariance matrix \(\boldsymbol{\mathrm{P_{cov}}}\) can be derived by pre- and post-multiplying the correlation matrix \(\boldsymbol{\mathrm{P_{cor}}}\) with diagonal matrices \(\boldsymbol{\mathrm{P_{sd}}}\) of these standard deviations

\[\boldsymbol{\mathrm{P_{cov}}} = \boldsymbol{\mathrm{P_{sd}}} \boldsymbol{\mathrm{P_{cor}}}\boldsymbol{\mathrm{P_{sd}}}\]

\[\boldsymbol{\mathrm{P_{sd}}}= \begin{bmatrix} \mathrm{SD}( \boldsymbol{\mu_{\mathrm{ }}} ) & 0 \\

0 & \mathrm{SD}( \boldsymbol{\beta_{\mathrm{ }}} ) \end{bmatrix}, \quad

\boldsymbol{\mathrm{P_{cor}}} = \begin{bmatrix} 1 & \mathrm{Cor}( \boldsymbol{\mu_{\mathrm{ }}}, \boldsymbol{\beta_{\mathrm{ }}} ) \\

\mathrm{Cor}( \boldsymbol{\beta_{\mathrm{ }}} , \boldsymbol{\mu_{\mathrm{ }}} ) & 1

\end{bmatrix} \]

We can simulate a random dataset from this model in R, along with an index variable id that tracks which individual (I = 1 - 50) is being measured at each observation (N = 1-100).

library(mvtnorm)

N = 100 #total observations

I = 50 #total individuals

intercept = 1 #global intercept

beta1 = 0.3 #fixed effect regression coefficient

SD_intercept = 0.3 #standard deviation of random intercepts

SD_slope = 0.3

SD_residual = 1

cor_RE = 0.3 #correlation of random intercepts and slopes

#individual-level index

id = rep(seq(1, I), each = N/I) #i.e. two observations per individual

#simulate fixed effect covariate

x = rnorm(100,0,1)

#simulate random individual deviations

Pcor = matrix( c(1, cor_RE, cor_RE, 1), nrow = 2, ncol = 2 )

Psd = matrix( c(SD_intercept, 0, 0, SD_slope), nrow = 2, ncol = 2 )

Pcov = Psd %*% Pcor %*% Psd

re_P = rmvnorm(I, mean = c(0,0), sigma = Pcov) #rows = I, cols = intercepts and slopes

#individual-level parameters

mu = re_P[,1]

beta = re_P[,2]

#residual effects

epsilon = rnorm(100, 0, SD_residual )

#measured response (100 response values for 50 subjects)

z = intercept + mu[id] + (beta1 + beta[id])*x + epsilon

#combine into list for Stan

#other values are empirically unobserved and will be model parameters

stan_data = list(z = z, x = x, id = id, N = N, I = I)We can now program a Stan model to infer the data-generating process with these empirical observations. For any .stan file composed with a text editor, the following programming blocks will be recognized and all model code inside each block will be processed sequentially.

functions {

}

data {

}

transformed data {

}

parameters {

}

transformed parameters {

}

model {

}

generated quantities {

}

The data, parameters, and model blocks are specified for any model, while the other blocks provide optional declarations and statements. In most statistical software, empirical data are input with a single matrix or dataframe. Rather than inputting a single dataframe or matix to RStan, a list can be provided with data for each scalar (real or integer), vector, or matrix declared in the .stan file. The names of these data objects are declared along with their expected dimensions, which ensures that inappropriate data structures or likelihood functions will throw errors. For the simulated data, we first declare all the measured variables and indices relevant to model estimation. We use // rather than # for comments in Stan.

data {

int<lower=1> N; //length of response vector/total observations

int<lower=1> I; //number of individuals

int<lower=1> id[N]; //N integer indices matching observations of z to the individual identity

vector[N] x; //vector of covariate values for fixed effect

vector[N] z; //vector of response values

}This declarative approach requires that particular attention is given to the order of data input to the model, as values will need to be appropriately aligned and indexed throughout the model specification. However, it also provides additional benefits such as facilitating multi-response models with heterogeneous dimensions, as well as allowing for arbitrarily complex forms of social interaction to be specified in the model likelihood using appropriate indices of the relevant vectors or matrices.

We specify model parameters in accordance with the formal model used for the simulation, with standard deviations and correlation matrices replacing variances and covariance matrices. For simplicity, we use _P to indicate phenotypic (co)variances and values in the Stan code, with _R used to indicate residual (co)variance terms.

parameters {

//fixed effects

real mu_0; //global intercept

real beta_1; //fixed effect coefficient for covariate x

//random effects

corr_matrix[2] Pcor; //correlation matrix of random effects

vector<lower=0>[2] sd_P; //standard deviations of random effects

real<lower=0> sd_R; //standard deviation of residuals

matrix[I,2] re_P; //individual-level phenotypic deviations (random intercepts and slopes)

}Note that rather than declaring the random effects as separate vectors, we instead declare a matrix for both individual intercept and slope values, which we use in the model block for declaring the covariance between these parameters. It is necessary to specify <lower=0> so that the standard deviation parameters are lower bound at zero.

The other parameters in the formal model are simply combinations of these fundamental parameters. The transformed parameters block of a .stan file is intended for such purposes. In particular, the covariance matrix \(\boldsymbol{\mathrm{P_{cov}}}\) can be derived with the standard deviations sd_P and the correlation matrix Pcor as shown above. Separating these parameters is useful for increasing model clarity, as well for enhancing the efficiency of MCMC sampling as demonstrated further below.

transformed parameters {

cov_matrix[2] Pcov = diag_matrix(sd_P) * Pcor * diag_matrix(sd_P); //cov of random effects

}This new transformed parameter P can now be used in the model block to more clearly express the likelihood function. Note that new objects can also be declared inside the model block prior to specifying the likelihood. However, any objects created in the model block are temporary and will not be saved along with the MCMC samples of objects declared in the parameters and transformed parametrs blocks. This can be useful for creating pragmatic objects that enable more efficient coding but do not need to be directly interpreted. For instance, rather than subsetting the matrix of individual random effects re_P inside the model likelihood, we can instead create two temporary vectors mu and beta to more intuitively write the likelihood function. Following the formal model above, we specify the response \(z_{ij}\) as a function of the linear predictor containing population parameters as well as individual intercepts \(\mu_j\) and slopes in response to the environmental covariate \(\beta_j\), as well as stochastic effects with standard deviation \(\mathrm{SD(\boldsymbol{\epsilon})}=\)sd_R. The random effects are sampled from a zero-centered multivariate normal with covariance matrix \(\boldsymbol{\mathrm{P_{cov}}}\).

model {

vector[I] mu = col(re_P, 1); //temporary individual-level intercepts

vector[I] beta = col(re_P, 2); //temporary individual-level slopes

//model likelihood

//use index id to match response vector length

z ~ normal(mu_0 + mu[id] + (beta_1 + beta[id]).*x, sd_R);

for(i in 1:I) //each individual's random effects ~ MVN(0,P_cov)

re_P[i] ~ multi_normal([0,0], Pcov);

//priors

//fixed effects

mu_0 ~ normal(0,1);

beta_1 ~ normal(0,1);

//random effects

Pcor ~ lkj_corr(2);

to_vector(sd_P) ~ cauchy(0,1);

sd_R ~ cauchy(0,1);

}

Model priors are set for all parameters declared in the original programming block, while transformed parameters do not receive priors. We use general purpose, weakly regularizing priors to reduce the risk of inferential bias and enhance model identification, which will be crucial for SAMs relying on interactions among many latent variables. Interested readers should see Lemoine (2019) and McElreath (2020) for further discussion on the choice of model priors, as well as the clear limitations of using highly diffuse, flat, and/or improper priors that are more commonly utilized. Finally, rather than post-processing the posterior SDs ourselves to derive variances, we can instead use the generated quantities block to calculate the variances during model estimation.

generated quantities {

vector[2] V_P = sd_P .* sd_P; //RN intercept [1] and slope [2] variance

real V_R = sd_R * sd_R; //residual variance (=Sigma matrix)

}The posterior object returned from this model will now contain the random effects variances and covariance matrix, along with the SDs and correlation matrix. Each of the blocks can now be saved together in a single .stan file, which can be accomplished with a text editor or inside R.

write("

data {

int<lower=1> N; //length of response vector/total observations

int<lower=1> I; //number of individuals

int<lower=1> id[N]; //N integer indices matching observations of z to the individual identity

vector[N] x; //vector of covariate values for fixed effect

vector[N] z; //vector of response values

}

parameters {

//fixed effects

real mu_0; //global intercept

real beta_1; //fixed effect coefficient for covariate x

//random effects

corr_matrix[2] Pcor; //correlation matrix of random effects

vector<lower=0>[2] sd_P; //standard deviations of random effects

real<lower=0> sd_R; //standard deviation of residuals

matrix[I,2] re_P; //individual-level phenotypic deviations (random intercepts and slopes)

}

transformed parameters {

cov_matrix[2] Pcov = diag_matrix(sd_P) * Pcor * diag_matrix(sd_P); //cov of random effects

}

model {

vector[I] mu = col(re_P, 1); //temporary individual-level intercepts

vector[I] beta = col(re_P, 2); //temporary individual-level slopes

//model likelihood

//use index id to match response vector length

z ~ normal(mu_0 + mu[id] + (beta_1 + beta[id]).*x, sd_R);

for(i in 1:I) //each individual's random effects ~ MVN(0,P_cov)

re_P[i] ~ multi_normal([0,0], Pcov);

//priors

//fixed effects

mu_0 ~ normal(0,1);

beta_1 ~ normal(0,1);

//random effects

Pcor ~ lkj_corr(2);

to_vector(sd_P) ~ cauchy(0,1);

sd_R ~ cauchy(0,1);

}

generated quantities {

vector[2] V_P = sd_P .* sd_P; //RN intercept [1] and slope [2] variance

real V_R = sd_R * sd_R; //residual variance (=Sigma matrix)

}", "mod1.stan")The model is now ready for estimation. We manually specify that the MCMC sampler should use 1500 iterations per chain to converge on the target joint posterior distribution warmup=1500, with the subsequent 1000 iterations used as posterior samples iter = 2500 (i.e. iter - warmup = number of MCMC samples per chain). init = 0 initializes the samplers near null values. Four MCMC chains are used to assess model convergence across independent random samplers chains=4, with one core assigned to each chain for parallel processing cores=4. The adapt_delta=0.90 argument reduces the risk of divergent transitions during sampling.

library(rstan)## Loading required package: StanHeaders## Loading required package: ggplot2## RStudio Community is a great place to get help: https://community.rstudio.com/c/tidyverse## rstan (Version 2.21.2, GitRev: 2e1f913d3ca3)## For execution on a local, multicore CPU with excess RAM we recommend calling

## options(mc.cores = parallel::detectCores()).

## To avoid recompilation of unchanged Stan programs, we recommend calling

## rstan_options(auto_write = TRUE)## Do not specify '-march=native' in 'LOCAL_CPPFLAGS' or a Makevars filemod1 = stan_model("mod1.stan")## Warning in system(paste(CXX, ARGS), ignore.stdout = TRUE, ignore.stderr = TRUE): 'C:/rtools40/usr/mingw_/bin/g++' not

## found## hash mismatch so recompiling; make sure Stan code ends with a blank linestan_results <- sampling(mod1, data=stan_data, init = 0, warmup=1500, iter = 2500,

chains=4, cores=4, control=list(adapt_delta=0.90) )## Warning: There were 8 divergent transitions after warmup. See

## http://mc-stan.org/misc/warnings.html#divergent-transitions-after-warmup

## to find out why this is a problem and how to eliminate them.## Warning: There were 4 chains where the estimated Bayesian Fraction of Missing Information was low. See

## http://mc-stan.org/misc/warnings.html#bfmi-low## Warning: Examine the pairs() plot to diagnose sampling problems## Warning: The largest R-hat is NA, indicating chains have not mixed.

## Running the chains for more iterations may help. See

## http://mc-stan.org/misc/warnings.html#r-hat## Warning: Bulk Effective Samples Size (ESS) is too low, indicating posterior means and medians may be unreliable.

## Running the chains for more iterations may help. See

## http://mc-stan.org/misc/warnings.html#bulk-ess## Warning: Tail Effective Samples Size (ESS) is too low, indicating posterior variances and tail quantiles may be unreliable.

## Running the chains for more iterations may help. See

## http://mc-stan.org/misc/warnings.html#tail-essStan flags a few potential issues with the MCMC sampler. Note that the warning ## Warning in system(paste(CXX, ARGS), ignore.stdout = TRUE, ignore.stderr = TRUE): 'C:/rtools40/usr/mingw_/bin/g++' not found during compilation of the model will sometimes appear on Windows computers and can be safely ignored. Further description of the sampler warnings can be found in the Stan Warning Guide. One warning is that “The largest R-hat is NA, indicating chains have not mixed.” Stan does not know whether some parameter values are fixed (causing Rhat = NA) because the sampler is stuck, or because the model has been intentionally specified with fixed parameter values (e.g. diagonals fixed to 1 in a correlation matrix or an intercept forced to 0). For the specified model, this is a harmless warning that can be safely ignored. However, we can also check for issues by looking at the Rhat values of all model parameters using summary() on the saved results. If an expected parameter is missing from the table or shows NA, this likely indicates an unintentional error in the model code.

summary(stan_results)$summary[,"Rhat"]## mu_0 beta_1 Pcor[1,1] Pcor[1,2] Pcor[2,1] Pcor[2,2] sd_P[1] sd_P[2] sd_R re_P[1,1]

## 0.9997521 1.0003572 NaN 1.0090841 1.0090841 0.9989995 1.0601371 1.0242973 1.0108377 1.0211173

## re_P[1,2] re_P[2,1] re_P[2,2] re_P[3,1] re_P[3,2] re_P[4,1] re_P[4,2] re_P[5,1] re_P[5,2] re_P[6,1]

## 1.0032851 1.0019569 1.0012126 0.9993447 0.9997130 0.9995471 0.9995881 1.0040075 1.0071919 1.0030085

## re_P[6,2] re_P[7,1] re_P[7,2] re_P[8,1] re_P[8,2] re_P[9,1] re_P[9,2] re_P[10,1] re_P[10,2] re_P[11,1]

## 1.0012824 1.0020910 1.0000014 1.0352974 1.0100975 1.0046201 1.0017715 1.0032624 1.0014731 1.0009535

## re_P[11,2] re_P[12,1] re_P[12,2] re_P[13,1] re_P[13,2] re_P[14,1] re_P[14,2] re_P[15,1] re_P[15,2] re_P[16,1]

## 1.0018118 0.9997637 0.9996327 0.9999519 1.0005573 1.0012118 1.0001971 0.9995699 0.9995915 1.0002669

## re_P[16,2] re_P[17,1] re_P[17,2] re_P[18,1] re_P[18,2] re_P[19,1] re_P[19,2] re_P[20,1] re_P[20,2] re_P[21,1]

## 0.9993361 1.0003014 0.9996823 1.0121158 1.0072494 1.0017259 0.9996514 1.0074519 1.0011461 1.0015644

## re_P[21,2] re_P[22,1] re_P[22,2] re_P[23,1] re_P[23,2] re_P[24,1] re_P[24,2] re_P[25,1] re_P[25,2] re_P[26,1]

## 1.0031717 1.0232036 1.0062477 1.0014611 1.0010892 1.0048734 1.0002254 0.9998225 1.0044160 0.9995626

## re_P[26,2] re_P[27,1] re_P[27,2] re_P[28,1] re_P[28,2] re_P[29,1] re_P[29,2] re_P[30,1] re_P[30,2] re_P[31,1]

## 0.9995252 1.0146794 1.0034305 1.0258276 1.0029981 1.0007896 1.0004616 1.0090475 1.0000194 1.0052447

## re_P[31,2] re_P[32,1] re_P[32,2] re_P[33,1] re_P[33,2] re_P[34,1] re_P[34,2] re_P[35,1] re_P[35,2] re_P[36,1]

## 0.9997576 1.0018832 1.0023457 1.0049056 1.0011138 1.0165766 1.0036821 1.0007315 1.0008874 1.0085257

## re_P[36,2] re_P[37,1] re_P[37,2] re_P[38,1] re_P[38,2] re_P[39,1] re_P[39,2] re_P[40,1] re_P[40,2] re_P[41,1]

## 1.0086161 0.9998389 1.0000610 1.0063159 1.0009746 1.0007595 1.0010491 1.0022543 1.0024942 1.0043573

## re_P[41,2] re_P[42,1] re_P[42,2] re_P[43,1] re_P[43,2] re_P[44,1] re_P[44,2] re_P[45,1] re_P[45,2] re_P[46,1]

## 1.0011428 1.0005166 1.0000737 1.0040573 0.9994275 1.0006120 1.0000841 1.0009916 1.0004643 1.0059609

## re_P[46,2] re_P[47,1] re_P[47,2] re_P[48,1] re_P[48,2] re_P[49,1] re_P[49,2] re_P[50,1] re_P[50,2] Pcov[1,1]

## 1.0014573 1.0011021 1.0024054 1.0038231 1.0065090 1.0106748 1.0015656 1.0036409 1.0015379 1.0428954

## Pcov[1,2] Pcov[2,1] Pcov[2,2] V_P[1] V_P[2] V_R lp__

## 1.0113718 1.0113718 1.0155160 1.0428954 1.0155160 1.0107378 1.0411590In addition to the Rhat warning, the effective sample sizes of some model parameters are too low to ensure accurate inferences. It is helpful to see which parameters are causing these warnings by sorting on the lowest n_eff values in the summary table.

sort(summary(stan_results)$summary[,"n_eff"])## lp__ sd_P[1] Pcov[1,1] V_P[1] sd_P[2] re_P[8,1] re_P[28,1] Pcov[2,2] V_P[2] re_P[22,1]

## 46.05295 66.51469 105.12070 105.12070 123.24947 127.81135 168.44406 183.86975 183.86975 212.68495

## re_P[1,1] re_P[27,1] sd_R re_P[8,2] V_R Pcov[1,2] Pcov[2,1] re_P[34,1] re_P[5,2] re_P[48,2]

## 227.37010 259.26699 259.68094 259.92900 261.70946 319.02414 319.02414 354.30794 374.28899 394.02385

## re_P[18,2] re_P[22,2] re_P[1,2] re_P[36,2] re_P[18,1] re_P[30,1] Pcor[1,2] Pcor[2,1] re_P[49,1] re_P[34,2]

## 429.14276 491.00569 502.03028 505.43609 527.49644 542.90754 562.69263 562.69263 583.25909 624.82178

## re_P[25,2] re_P[49,2] re_P[38,1] re_P[36,1] re_P[46,1] re_P[27,2] re_P[40,2] re_P[20,1] re_P[9,2] re_P[9,1]

## 657.82558 705.66463 747.13931 748.75629 879.15171 897.20123 942.37240 961.81375 975.37203 1033.91389

## re_P[48,1] re_P[32,2] re_P[47,2] re_P[6,1] re_P[31,1] re_P[33,1] re_P[13,2] re_P[41,1] re_P[50,1] re_P[10,2]

## 1125.39432 1193.56016 1203.43297 1303.35406 1332.60664 1502.64438 1520.26521 1606.99389 1634.19314 1706.25973

## re_P[20,2] re_P[24,1] re_P[14,1] re_P[39,2] re_P[5,1] re_P[28,2] re_P[11,2] re_P[50,2] re_P[43,1] re_P[2,1]

## 1845.76288 1848.84272 1910.50599 1941.13094 1980.69289 2081.72909 2249.38593 2253.39030 2354.32817 2371.27570

## re_P[35,2] re_P[46,2] re_P[47,1] beta_1 re_P[24,2] re_P[21,2] re_P[32,1] re_P[10,1] re_P[21,1] re_P[23,2]

## 2435.53421 2454.91948 2507.25294 2566.48970 2615.36808 2645.70509 2689.46498 2776.62970 2810.26437 2922.19129

## re_P[15,2] mu_0 re_P[11,1] re_P[14,2] re_P[6,2] re_P[40,1] re_P[25,1] re_P[2,2] re_P[39,1] re_P[45,1]

## 3026.64804 3034.68833 3194.72613 3225.54043 3230.70362 3356.79674 3421.19722 3564.61139 3595.50398 3676.20347

## re_P[38,2] re_P[7,1] re_P[23,1] re_P[42,2] re_P[17,1] re_P[45,2] Pcor[2,2] re_P[41,2] re_P[35,1] re_P[43,2]

## 3768.42217 3818.59771 3828.04436 3848.32887 3899.35804 3981.60850 4012.07251 4034.73310 4041.93982 4093.01264

## re_P[30,2] re_P[13,1] re_P[33,2] re_P[31,2] re_P[29,1] re_P[37,1] re_P[7,2] re_P[37,2] re_P[19,1] re_P[42,1]

## 4098.82666 4191.22420 4251.78536 4284.98255 4291.62320 4325.35687 4329.52403 4330.81929 4357.06426 4380.59650

## re_P[44,1] re_P[15,1] re_P[16,1] re_P[16,2] re_P[19,2] re_P[3,1] re_P[17,2] re_P[29,2] re_P[26,1] re_P[12,1]

## 4405.31693 4500.91337 4501.99953 4561.91162 4584.78054 4635.60110 4637.37557 4681.11304 4844.45483 4858.58473

## re_P[26,2] re_P[4,1] re_P[44,2] re_P[4,2] re_P[3,2] re_P[12,2]

## 4880.19699 5040.04712 5224.73609 5321.05501 5472.03335 5660.40394It is typical that individual-specific trait values in re_P have relatively lower effective sample sizes than the population-level parameters of primary interest. More damningly, however, we also see an extremely low effective sample for lp__, which is the joint log density of the model (up to a constant internally defined scale factor). This provides further evidence that the model, as currently defined, is poorly identified. The key random effect SDs sd_P and variances V_P are also very poorly sampled, along with the residual SD sd_R and variance V_R. We could run the MCMC sampler for more iterations, increase the warm-up period, and change various other manual control settings. However, the deeper issue here is not that the model is formally mispecified but rather that we have inefficiently parametrized the model for sampling.

1.4.1 Cholesky decompositions

Although the .stan file appropriately represents the formal model, it is programmed in such a way that the MCMC sampler has troubling sampling from the joint posterior distribution of the model. One of the first things we can do to increase efficiency is to reduce redundant computation over matrices in our model. This can be done with Cholesky decompositions. For any positive definite matrix \(\boldsymbol{\Omega}\), a Cholesky decomposition can be defined such that

\[\boldsymbol{\Omega} = \boldsymbol{\mathrm{L}_{\Omega}} \boldsymbol{\mathrm{L}_{\Omega}}^{\mathrm{T}}\] where \(\boldsymbol{\mathrm{L}_{\Omega}}\) is a lower-triangular matrix and \(^{\mathrm{T}}\) indicates matrix transposition. This property means that we can always do computations of reduced dimensionality on the lower-triangular matrix \(\boldsymbol{\mathrm{L}_{\Omega}}\) and subsequently recover the full positive-definitive matrix \(\boldsymbol{\Omega}\) by post-multiplying \(\boldsymbol{\mathrm{L}_{\Omega}}\) with its transpose.

Stan provides many built-in functions for easily defining and manipulating Cholesky decomposed matrices, which we can use to reparametrize the .stan file. Comments are added below where Cholesky decompositions have been introduced.

data {

int<lower=1> N;

int<lower=1> I;

int<lower=1> id[N];

vector[N] x;

vector[N] z;

}

parameters {

real mu_0;

real beta_1;

cholesky_factor_corr[2] LPcor; //lower tri Cholesky of random effect cor matrix

vector<lower=0>[2] sd_P;

real<lower=0> sd_R;

matrix[I,2] re_P;

}

transformed parameters {

cholesky_factor_cov[2] LPcov = diag_pre_multiply(sd_P, LPcor); //Cholesky of random effect cov

}

model {

vector[I] mu = col(re_P, 1);

vector[I] beta = col(re_P, 2);

z ~ normal(mu_0 + mu[id] + (beta_1 + beta[id]).*x, sd_R);

for(i in 1:I)

re_P[i] ~ multi_normal_cholesky([0,0], LPcov); //likelihood expecting Cholesky cov

mu_0 ~ normal(0,1);

beta_1 ~ normal(0,1);

LPcor ~ lkj_corr_cholesky(2); //prior for Cholesky matrix

to_vector(sd_P) ~ cauchy(0,1);

sd_R ~ cauchy(0,1);

}

generated quantities {

vector[2] V_P = sd_P .* sd_P;

real V_R = sd_R * sd_R;

corr_matrix[2] Pcor = LPcor*LPcor' ; //multiply by transpose to get full cor matrix

cov_matrix[2] Pcov = diag_matrix(V_P) * Pcor * diag_matrix(V_P); //full cov matrix

The full covariance and correlation matrices are now specified in the generated quantities block.

1.4.2 Non-centered random effects

Before running this model, we can also reparametrize the random effects to further enhance efficiency. Currently, we express the unobserved random effects in re_P as being generated from a distribution with unobserved lower Cholesky covariance matrix LPcov. While mathematically appropriate, this specification can make it difficult for the model to identify the scale of the random effects. An alternative but mathematically equivalent parametrization can be used to separate out the scale of the random effect deviations from the population-level (co)variances, which often will enhance model identification. Note that any normally distributed random variable \(\boldsymbol{z}\) where

\[\boldsymbol{z} \sim \mathrm{Normal}(0,\sigma_z)\] can also be expressed as a standard normal variable \(z_{std}\) scaled by the original SD \[\boldsymbol{z} \equiv \boldsymbol{z_{\mathrm{std}}}\sigma_z\] \[\boldsymbol{z_{\mathrm{std}}} \sim \mathrm{Normal}(0,1)\] Similarly for a n x p matrix \(\boldsymbol{Z}\) of p multivariate phenotypes with covariance matrix \(\boldsymbol{\mathrm{C}}\)

\[\boldsymbol{Z} \equiv \boldsymbol{Z_{\mathrm{std}}} \boldsymbol{\mathrm{L}_{\boldsymbol{\mathrm{C}}}}^{\mathrm{T}}\]

\[\mathrm{vec}(\boldsymbol{Z_{\mathrm{std}}}) \sim \mathrm{MVNormal}(\boldsymbol{0},\boldsymbol{\mathrm{I}})\]

where \(\boldsymbol{\mathrm{L}_{\boldsymbol{\mathrm{C}}}}\) is the lower-triangular Cholesky decomposition. Implementing this so-called “non-centered parametrization” is straightforward in Stan and can of course also be applied to correlation matrices. Building on the Cholesky decompositions added in the previous subsection, and using the ' symbol for the transpose function

write("

data {

int<lower=1> N;

int<lower=1> I;

int<lower=1> id[N];

vector[N] x;

vector[N] z;

}

parameters {

real mu_0;

real beta_1;

cholesky_factor_corr[2] LPcor;

vector<lower=0>[2] sd_P;

real<lower=0> sd_R;

matrix[I,2] std_P; //now matrix of standard normals (see priors below)

}

transformed parameters {

matrix[I,2] re_P = std_P * diag_pre_multiply(sd_P,LPcor)'; //non-centered parameterization

}

model {

vector[I] mu = col(re_P, 1);

vector[I] beta = col(re_P, 2);

z ~ normal(mu_0 + mu[id] + (beta_1 + beta[id]).*x, sd_R);

mu_0 ~ normal(0,1);

beta_1 ~ normal(0,1);

to_vector(std_P) ~ std_normal(); //new prior distribution over standard normal deviations

LPcor ~ lkj_corr_cholesky(2);

to_vector(sd_P) ~ cauchy(0,1);

sd_R ~ cauchy(0,1);

}

generated quantities {

vector[2] V_P = sd_P .* sd_P;

real V_R = sd_R * sd_R;

corr_matrix[2] Pcor = LPcor*LPcor' ;

cov_matrix[2] Pcov = diag_matrix(V_P) * Pcor * diag_matrix(V_P);

}", "mod1.stan")Note that the specification of the random effects has been greatly simplified with the non-centered parametrization. By separating out the scale of the deviations and the population-level (co)variances, it becomes unnecessary to directly specify the generative distribution of the full random effects as above. Instead, the full distribution is partitioned into three independent priors over the random effect standard normal deviations, SDs, and correlations, and the generative distribution of these values is specified directly through the scaling of the standard normals, i.e. re_P = std_P * diag_pre_multiply(sd_P,LP_cor)'=\(\boldsymbol{Z} \equiv \boldsymbol{Z_{\mathrm{std}}} \boldsymbol{\mathrm{L}_{\boldsymbol{\mathrm{C}}}}^{\mathrm{T}}\) above. This should make the model much easier to sample from.

library(rstan)

mod1 = stan_model("mod1.stan")## Warning in system(paste(CXX, ARGS), ignore.stdout = TRUE, ignore.stderr = TRUE): 'C:/rtools40/usr/mingw_/bin/g++' not

## found## recompiling to avoid crashing R sessionstan_results2 <- sampling(mod1, data=stan_data, init = 0, warmup=1500, iter = 2500,

chains=4, cores=4, control=list(adapt_delta=0.90) )

#extracts posterior estimates

MCMCsamples <- extract(stan_results2)The absence of warning messages indicates that our mathematically equivalent reparametrizations have enhanced the efficiency of the MCMC sampler. The posterior samples of the model can subsequently be extracted, summarized, visualized, and manipulated. E.g.

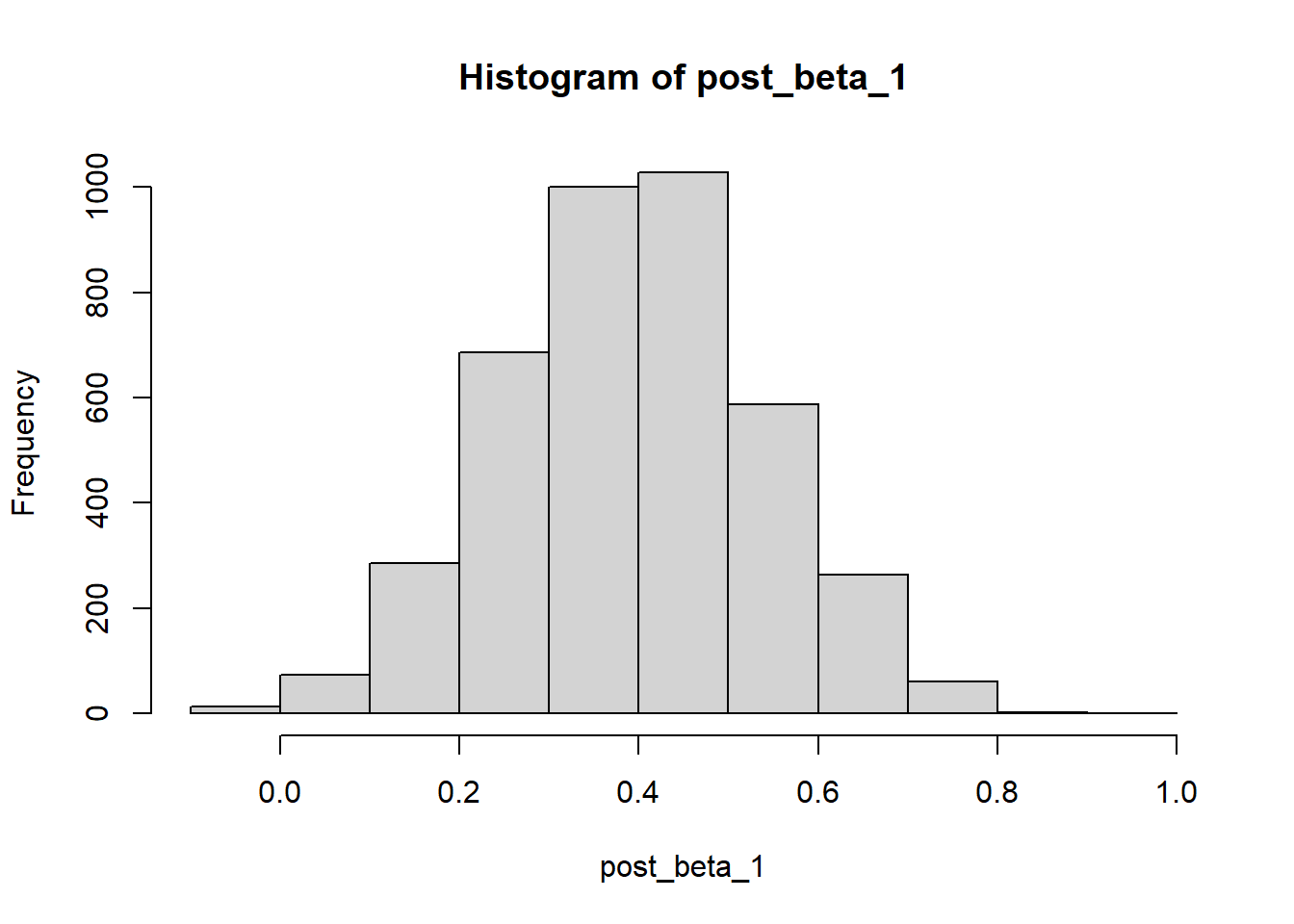

post_beta_1 = MCMCsamples$beta_1 #extract population-level slope

median(post_beta_1) #central tendency of posterior## [1] 0.3943837mad(post_beta_1) #dispersion around central tendency## [1] 0.1451042quantile(post_beta_1, c(0.05,0.95)) #90% credible interval## 5% 95%

## 0.1525127 0.6379658sum(post_beta_1 > 0)/length(post_beta_1) #posterior probability of + effect## [1] 0.99675hist(post_beta_1) #MCMC approximation of posterior distribution

We encourage the use of the shinystan R package for deeper inspection of model convergence and results with a GUI. In general, researchers should be skeptical of reporting results accompanied with sampler warnings and should seek to remove any diagnostic concerns prior to biological interpretation of the estimates.

1.5 Animal models

The model presented above assumes a single set of individual-specific intercepts and slopes, as defined by the mu and beta vectors in the .stan file. For quantitative genetic analysis with an animal model, these phenotypic effects can be further decomposed into distinct genetic and permanent environmental trait values. In particular, we expand the random phenotypic deviations so that

\[ \boldsymbol{\mu_{\mathrm{ }}} = \boldsymbol{\mu_{\mathrm{A}}} + \boldsymbol{\mu_{\mathrm{E}}}, \quad \boldsymbol{\beta_{\mathrm{ }}} = \boldsymbol{\beta_{\mathrm{A}}} + \boldsymbol{\beta_{\mathrm{E}}}\] \[ \begin{bmatrix} \boldsymbol{\mu_{\mathrm{A}}} \\ \boldsymbol{\beta_{\mathrm{A}}} \end{bmatrix} \sim \mathrm{MVNormal}(\boldsymbol{0}, \boldsymbol{\mathrm{G}} \otimes \boldsymbol{\mathrm{A}}) : \boldsymbol{\mathrm{G}} = \begin{bmatrix} \mathrm{Var}( \boldsymbol{\mu_{\mathrm{A}}} ) & \mathrm{Cov}( \boldsymbol{\mu_{\mathrm{A}}}, \boldsymbol{\beta_{\mathrm{A}}}) \\ \mathrm{Cov}(\boldsymbol{\beta_{\mathrm{A}}}, \boldsymbol{\mu_{\mathrm{A}}} ) & \mathrm{Var}( \boldsymbol{\beta_{\mathrm{A}}} ) \end{bmatrix} \]

\[ \begin{bmatrix} \boldsymbol{\mu_{\mathrm{E}}} \\ \boldsymbol{\beta_{\mathrm{E}}} \end{bmatrix} \sim \mathrm{MVNormal}(\boldsymbol{0}, \boldsymbol{\mathrm{E}} \otimes \boldsymbol{\mathrm{I}}) : \boldsymbol{\mathrm{E}} = \begin{bmatrix} \mathrm{Var}( \boldsymbol{\mu_{\mathrm{E}}} ) & \mathrm{Cov}( \boldsymbol{\mu_{\mathrm{E}}}, \boldsymbol{\beta_{\mathrm{E}}}) \\ \mathrm{Cov}(\boldsymbol{\beta_{\mathrm{E}}}, \boldsymbol{\mu_{\mathrm{E}}} ) & \mathrm{Var}( \boldsymbol{\beta_{\mathrm{E}}} ) \end{bmatrix} \]

where \(\boldsymbol{\mathrm{A}}\) is a positive-definite relatedness matrix derived from pedigree or molecular data. As we explain below, challenges arise when estimating such a model in Stan due to the difficulty of computing Kronecker products and the identification of genetic effects.

1.5.1 Simulate data

We use a custom function pedfun to generate an appropriately sized, positive-definite matrix for data simulation, which has been modified from prior work by Thomson et al. (2018). This function is also utilized in the simulation code provided on the Github page. We supply basic demographic settings and generate \(\boldsymbol{\mathrm{A}}\), and we increase the sample size to N=300 to aid parameter estimate.

#custom function

library(MCMCglmm)

library(Matrix)

pedfun <- function(popmin, popmax, ngenerations,

epm, nonb, nids, I, missing=FALSE){

# get list of individuals and their generations

gener<-1:ngenerations

genern <- rep(1:ngenerations, times = nids)

ID <- 1:sum(nids)

# runs on generation-by-generation basis

for(i in 1:ngenerations){

id<-ID[which(genern==i)]

dam<-rep(NA, nids[i])

sire<-rep(NA, nids[i])

# randomly allocates sex (0 = male, 1 = female)

sex<-sample(c(0,1), length(id), replace=TRUE)

# for first generation, no dams or sires are known

# so remain NA

if(i==1){

# combine into single data frame

pedigree<-data.frame(id=id, dam=dam, sire=sire,

generation=i, sex=sex)

}

else if(i>1){

# for all generations after first

# list of all possible dams and sires

# from previous generation

pdams<-pedigree$id[which(pedigree$generation==(i-1) &

pedigree$sex==1)]

psires<-pedigree$id[which(pedigree$generation==(i-1) &

pedigree$sex==0)]

# determine number of pairs

# depending on how many males and females

# and the proportion of the population that is non-breeding

npairs<-min(length(pdams), length(psires)) -

round(min(length(pdams), length(psires))*nonb)

# selects breeding males and females

pdams<-pedigree$id[which(pedigree$generation==(i-1) &

pedigree$sex==1)]

psires<-pedigree$id[which(pedigree$generation==(i-1) &

pedigree$sex==0)]

if(length(pdams)<npairs | length(psires)<npairs){

npairs<-min(length(pdams), length(psires))

}

# selects pairs from possible dams and sires

pairs<-data.frame(dam=sample(pdams, npairs, replace=FALSE),

sire=sample(psires, npairs, replace=FALSE))

# gives each offspring their parental pair

pairid<-as.numeric(sample(rownames(pairs),

length(id), replace=TRUE))

# gives each offspring their sex

sex<-sample(c(0,1), length(id), replace=TRUE)

# put into dataframe format

addped<-data.frame(id=id,

dam=pairs$dam[pairid],

sire=pairs$sire[pairid],

generation=i,

sex=sex)

# deals with extra-pair mating (if included)

if(!is.null(epm)){

# for each individual, sample if they are extra pair

# if 0 not extra pair

# if 1 sire resampled from breeding population

# if 2 dam resampled

ext<-sample(c(0,1,2), nrow(addped),

replace=TRUE,

prob = c(1-epm, epm/2, epm/2))

for(j in 1:nrow(addped)){

if(ext[j]>0){

if(ext[j]==1){

addped$sire[j]<-sample(psires,1,replace=TRUE)

}else if (ext[j]==2){

addped$dam[j]<-sample(pdams,1,replace=TRUE)

}

}

}

}

# add new generation to the whole pedigree

pedigree<-rbind(pedigree, addped)

}

}

ped <- pedigree

# make id's non-numeric

ped$id<-paste("ID",ped$id, sep="")

ped$dam[which(!is.na(ped$dam))]<-paste("ID",ped$dam[which(!is.na(ped$dam))], sep="")

ped$sire[which(!is.na(ped$sire))]<-paste("ID",ped$sire[which(!is.na(ped$sire))], sep="")

ped$id<-as.character(ped$id)

ped$dam<-as.character(ped$dam)

ped$sire<-as.character(ped$sire)

IDs <- sample(ped[ped$generation==ngenerations, "id"], I, replace=FALSE)

ped <- prunePed(ped, keep = IDs, make.base=TRUE)

inv.phylo <- inverseA(ped[,c("id","dam","sire")])

A <- solve(inv.phylo$Ainv)

A <- cov2cor(A)

A = (A + t(A))/2 # Not always symmetric after inversion

A <- as.matrix(A)

rownames(A) <- rownames(inv.phylo$Ainv)

colnames(A) <- rownames(inv.phylo$Ainv)

#subset to final generation

A_sub<-A[IDs,IDs]

A_mat <- as.matrix(nearPD(A_sub)$mat)

A_mat <- cov2cor(A_mat)

return(A_mat)

}

#population properties

I=300 #total individuals for simulation

popmin=400

popmax=600

ngenerations = 10

nids<-sample(popmin:popmax, ngenerations, replace=TRUE) #N / generation

epm = sample(seq(0.15, 0.25,by=0.05),1) #extra-pair mating

nonb = sample(seq(0.4,0.6,by=0.05),1) #proportion of non-breeding / generation

#relatedness matrix

A_mat <- pedfun(popmin=popmin, popmax=popmax, ngenerations=ngenerations,

epm=epm, nonb=nonb, nids=nids, I=I, missing=FALSE)We can now simulate a new dataset using the code from above, partitioning the distinct additive genetic and permanent environmental trait values. We include a third measurement per individual to enhance estimation of the individual-level RN intercepts and slopes.

library(mvtnorm)

N = 900 #total observations (3x/individual)

I = 300 #total individuals

intercept = 1 #global intercept

beta1 = 0.3 #fixed effect regression coefficient

SD_intercept = 0.3 #standard deviation of random intercepts

SD_slope = 0.3

SD_residual = 1

r_G = 0.5 #genetic correlation of random intercepts and slopes

r_E = -0.5 #environmental correlation

V_G = 0.3 #genetic variance of REs

V_E = 0.3 #genetic variance of REs

#Random effect correlations

G_cor <- matrix(c(1,r_G,r_G,1), nrow=2, ncol=2) #mu_A, beta_A

G_sd <- c(sqrt(V_G),sqrt(V_G)) #G effect sds

G_cov <- diag(G_sd) %*% G_cor %*% diag(G_sd)

E_cor <- matrix(c(1,r_E,r_E,1), nrow=2, ncol=2) #mu_E, beta_E

E_sd <- c(sqrt(V_E),sqrt(V_E)) #E effect sds

E_cov <- diag(E_sd) %*% E_cor %*% diag(E_sd)

#matrices

G_block <- G_cov %x% A_mat

E_block <- E_cov %x% diag(1,I)

#generate correlated REs

Gvalues <- rmvnorm(1, mean=rep(0,I*2), sigma=G_block)

G_val = data.frame(matrix(Gvalues, nrow=I, ncol=2))

cor(G_val)## X1 X2

## X1 1.0000000 0.5337091

## X2 0.5337091 1.0000000Evalues <- rmvnorm(1, mean=rep(0,I*2), sigma=E_block)

E_val = data.frame(matrix(Evalues, nrow=I, ncol=2))

cor(E_val)## X1 X2

## X1 1.000000 -0.548147

## X2 -0.548147 1.000000#combine

re_P = cbind(G_val,E_val)

colnames(re_P) = c("mu_A", "beta_A", "mu_E", "beta_E")

#individual-level index

id = rep(seq(1, I), each = N/I) #i.e. two observations per individual

#simulate fixed effect covariate

x = rnorm(N,0,1)

#individual phenotypic REs

mu = re_P$mu_A + re_P$mu_E

beta = re_P$beta_A + re_P$beta_E

#residual effects

epsilon = rnorm(N, 0, SD_residual )

#measured response

z = intercept + mu[id] + (beta1 + beta[id])*x + epsilon

#combine into list for Stan

stan_data = list(z = z, x = x, id = id, N = N, I = I, A = A_mat)1.5.2 Kronecker products

We now need to edit the Stan file to partition the genetic and environmental values. Unfortunately, there are no in-built Stan functions for efficiently computing Kronecker products \(\otimes\). This could be overcome by manually specifying the Kronecker product function in the optional functions block of the model. However, Kronecker products can be incredibly costly to compute, particularly for large matrices. It’s thus desirable to find another alternative but mathematically equivalent parametrization to return random effects appropriately scaled by \(\boldsymbol{\mathrm{G}} \otimes \boldsymbol{\mathrm{A}}\) without directly computing this term, as we did above with the Cholesky decomposition and non-centered random effects.

Fortunately, this can be easily accomplished by exploiting the properties of the matrix normal distribution, which generalizes the multivariate normal distribution to random variables described by matrices (Gupta and Nagar 2018). In particular, the matrix normal distribution for some n x p matrix \(\boldsymbol{\mathrm{Z}}\) of p phenotypes is given by

\[ \boldsymbol{\mathrm{Z}} \sim \mathrm{Matrix\ Normal_{n \ x \ p}}(\boldsymbol{\mathrm{M}}, \boldsymbol{\mathrm{U}}, \boldsymbol{\mathrm{V}}) \]

where \(\boldsymbol{\mathrm{M}}\) is a matrix of expected values and \(\boldsymbol{\mathrm{U}}\) and \(\boldsymbol{\mathrm{V}}\) are scaling matrices describing the among-row and among-column (co)variance respectively. This lesser known distribution generalizes from the multivariate normal distribution such that any matrix \(\boldsymbol{\mathrm{Z}}\) will be matrix normally distributed if and only if

\[ \mathrm{vec}(\boldsymbol{\mathrm{Z}}) \sim \mathrm{MVNormal_{np}}(\mathrm{vec}(\boldsymbol{\mathrm{M}}), \boldsymbol{\mathrm{V}} \otimes \boldsymbol{\mathrm{U}} ) \]

where \(\mathrm{vec}()\) is the vector operator, as used above in the mod1.stan file. Given that we are interested in generating random effects with covariance \(\boldsymbol{\mathrm{G}} \otimes \boldsymbol{\mathrm{A}}\), direct computation of the Kronecker product can be avoided by instead sampling the random effects from a matrix normal distribution with the appropriate scaling matrices, i.e. for the for the I x 2 matrix of additive genetic intercepts and slope deviations for I individuals

\[ \begin{bmatrix} \boldsymbol{\mu_{\mathrm{A}}} & \boldsymbol{\beta_{\mathrm{A}}} \end{bmatrix} \sim \mathrm{Matrix\ Normal_{I \ x \ 2}}(\boldsymbol{\mathrm{0}}, \boldsymbol{\mathrm{A}}, \boldsymbol{\mathrm{G}}) \]

We can use the non-centered parameterization described above for the multivariate normal distribution to also more efficiently sample from this matrix normal distribution. In particular, a matrix \(\boldsymbol{\mathrm{Z_{_{I \ x \ 2}}}}\) can be defined for I individual standard normal deviations on each of 2 random effects, which are distributed such that

\[\boldsymbol{\mathrm{Z_{std}}} \sim \mathrm{Matrix\ Normal_{I \ x \ 2}}(\boldsymbol{\mathrm{0}}, \boldsymbol{\mathrm{I}}, \boldsymbol{\mathrm{I}}) \]

The desired matrix of appropriately scaled, zero-centered random effects can then be defined such that

\[\begin{bmatrix} \boldsymbol{\mu_{\mathrm{A}}} & \boldsymbol{\beta_{\mathrm{A}}} \end{bmatrix} = \boldsymbol{0}+\boldsymbol{\mathrm{L_A}} \boldsymbol{\mathrm{Z_{std}}} \boldsymbol{\mathrm{L_G}}^{\mathrm{T}}\] where \[\begin{bmatrix} \boldsymbol{\mu_{\mathrm{A}}} & \boldsymbol{\beta_{\mathrm{A}}} \end{bmatrix} \sim \mathrm{Matrix\ Normal_{I \ x \ 2}}(\boldsymbol{0}, \boldsymbol{\mathrm{L_A}} \boldsymbol{\mathrm{L_A}}^{\mathrm{T}}, \boldsymbol{\mathrm{L_G}} \boldsymbol{\mathrm{L_G}}^{\mathrm{T}} )\]

As explained above, \(\boldsymbol{\mathrm{L_A}}\) is the lower triangular Cholesky decomposition of the \(\boldsymbol{\mathrm{A}}\) matrix, while \(\boldsymbol{\mathrm{L_G}}^{\mathrm{T}}\) is the transpose of the lower triangular Cholesky decomposition of the \(\boldsymbol{\mathrm{G}}\) covariance matrix. This sampling property of the matrix normal distribution therefore facilitates sampling from

\[ \mathrm{vec}( \begin{bmatrix} \boldsymbol{\mu_{\mathrm{A}}} & \boldsymbol{\beta_{\mathrm{A}}} \end{bmatrix} ) \sim \mathrm{MVNormal_{np}}(\mathrm{vec}(\boldsymbol{\mathrm{0}}), \boldsymbol{\mathrm{G}} \otimes \boldsymbol{\mathrm{A}} )\]

through the multiplication of the \(\boldsymbol{\mathrm{Z_{std}}}\), \(\boldsymbol{\mathrm{L_A}}\), and \(\boldsymbol{\mathrm{L_G}}^{\mathrm{T}}\) matrices.

This useful sampling property is straightforward to implement in Stan with appropriate data and can be used to account for any form of random effect covariation among individuals, which may extend beyond \(\boldsymbol{\mathrm{A}}\) alone. Thomson et al. (2018) provide an extensive review of various additional sources of autocorrelation that should be considered in quantitative genetic analyses.

Note that this matrix normal approach is key to efficient estimation of the SAM as well, as demonstrated in the subsequent tutorials. Here we review the relevant code in Stan to highlight how any Kronecker product could be implemented more generally. The code of mod1.stan can be modified accordingly, so that the basic linear mixed-effects model for phenotypic analysis becomes a linear animal model for quantitative genetic analysis. The relatedness matrix \(\boldsymbol{{\mathrm{A}}}\) is now declared in the data block, while the lower triangle Cholesky decomposition matrix \(\boldsymbol{\mathrm{L_A}}\) is generated in the transformed data block.

data {

int<lower=0> N;

int<lower=0> I;

int<lower=0> id[N];

vector[N] x;

vector[N] z;

matrix[I,I] A; //new relatedness matrix

}

transformed data{

matrix[I,I] LA = cholesky_decompose(A); //lower triangle relatedness matrix

}New parameters are also declared for the separate genetic (G) and permanent environmental (E) effects.

parameters {

//fixed effects

real mu_0;

real beta_1;

//random effects

cholesky_factor_corr[2] LGcor; //LC genetic correlation matrix

cholesky_factor_corr[2] LEcor; //LC permanent environmental correlation matrix

vector<lower=0>[2] sd_G; //SD of genetic effects

vector<lower=0>[2] sd_E; //SD of environmental effects

real<lower=0> sd_R;

matrix[I,2] std_G; //matrix of standard normals for G effects

matrix[I,2] std_E; //matrix of standard normals for E effects

}The appropriately scaled random deviations can then be specified in the transformed parameters block. The matrix normal parametrization, i.e. \(\left[ \boldsymbol{\mu_A}, \boldsymbol{\beta_A}\right] =0+\mathrm{L_A}\mathrm{Z_{std_G}}(\mathrm{G_{sd}}\mathrm{L}_{G_{cor}})^{\mathrm{T}}\) where \(\mathrm{G_{sd}}\mathrm{L}_{G_{cor}}=\mathrm{L}_{G_{}}\) is the lower Cholesky covariance matrix, is required for the additive genetic random effects, while the simpler non-centered approach may instead be used for the permanent environmental effects that are independently distributed among individuals. The ' function can again be used to return the transpose of the Cholesky decomposed covariance matrices in Stan.

transformed parameters {

matrix[I,2] re_G = LA * std_G * diag_pre_multiply(sd_G,LGcor)' ; //matrix normal

matrix[I,2] re_E = std_E * diag_pre_multiply(sd_E,LEcor)'; //non-centered

vector[I] mu = col(re_G, 1) + col(re_E, 1); //P = G + E

vector[I] beta = col(re_G, 2) + col(re_E, 2); //P = G + E

}With the addition of new priors in the model block

to_vector(std_devG) ~ std_normal(); //standard normal deviates

to_vector(std_devE) ~ std_normal();

LGcor ~ lkj_corr_cholesky(2);

LEcor ~ lkj_corr_cholesky(2);

to_vector(sd_G) ~ cauchy(0,1);

to_vector(sd_E) ~ cauchy(0,1);the model will be well defined and equivalent to the simpler formal model defined with Kronecker products of covariance matrices. Note that the permanent environmental effects are defined as they were for the purely phenotypic effects above, without consideration of the Kronecker product \(\boldsymbol{\mathrm{E}}\otimes\boldsymbol{\mathrm{I}}\). This product indicates that individuals’ trait values are independent and identically distributed, so that ignoring the Kronecker product in Stan with re_E = std_E * diag_pre_multiply(sd_E,LEcor)' is equivalent to specifying the matrix normal parameterization with additional Cholesky identity matrix \(\boldsymbol{\mathrm{L_{I}}}\), i.e. re_E = LI * std_E * diag_pre_multiply(sd_E,LE_cor)'.

1.5.3 Identifying genetic effects

This matrix normal approach makes the animal model computationally efficient, but a more fundamental issue remains for identifying the scales of the distinct \(\boldsymbol{\mathrm{G}}\) and \(\boldsymbol{\mathrm{E}}\) effects during model estimation. Given that \(\boldsymbol{\mathrm{P}}=\boldsymbol{\mathrm{G}} + \boldsymbol{\mathrm{E}}\) under the assumption of independent additive effects, it can be difficult to uniquely identify the scale of the distinct genetic and environmental trait values, as any increase/decrease in genetic trait values can be compensated by an equivalent decrease/increase in the environmental trait value to achieve equivalent phenotypic values. In principle, this issue is addressed by the fixed information in \(\boldsymbol{\mathrm{A}}\) that is provided to the model prior to estimation. In reality, however, relatedness matrices in the wild are often quite sparse, with most elements at or near 0. As a consequence, when a single individual-level parameter is expressed as the sum of two distinct parameters, as differentiated by the scaling of \(\boldsymbol{\mathrm{A}}\) and \(\boldsymbol{\mathrm{I}}\), it can be challenging to identify the proportion of variance attributable to each effect. Note that in the simplest case of completely unrelated individuals, i.e. \(\boldsymbol{\mathrm{A}} = \boldsymbol{\mathrm{I}}\), genetic and environmental effects are completely confounded and cannot be uniquely identified without introducing further assumptions, as any combination of genetic and environmental values summing to the same value will fit the data equally well.

Fortunately, in spite of the empirical reality of sparse relatedness matrices, it is possible to parameterize an animal model in Stan so that even weakly identified genetic effects can be disentangled from environmental effects, using whatever information is provided by the fixed relatedness matrix and empirical data. This is accomplished by re-expressing the scale of the \(\boldsymbol{\mathrm{G}}\) and \(\boldsymbol{\mathrm{E}}\) effects not as independent parameters, but rather as dependent variances derived from their proportion of a common phenotypic variance parameter. In other words, the model only has to identify the scale of the total phenotypic trait values rather than attempting to identify two independent but potentially confounded random effect variances, i.e.

\[\mathrm{Var}(\boldsymbol{\mu_\mathrm{ }}) = \frac { \mathrm{Var}(\boldsymbol{\mu_{\mathrm{A}}}) }{\mathrm{Var}(\boldsymbol{\mu_{\mathrm{P}}})}\mathrm{Var}(\boldsymbol{\mu_\mathrm{ }}) + \frac { \mathrm{Var}(\boldsymbol{\mu_{\mathrm{E}}}) }{\mathrm{Var}(\boldsymbol{\mu_{\mathrm{P}}})}\mathrm{Var}(\boldsymbol{\mu_\mathrm{ }})\]

\[\mathrm{Var}(\boldsymbol{\beta_\mathrm{ }}) = \frac { \mathrm{Var}(\boldsymbol{\beta_{\mathrm{A}}}) }{\mathrm{Var}(\boldsymbol{\beta_{\mathrm{ }}})}\mathrm{Var}(\boldsymbol{\beta_\mathrm{ }}) + \frac { \mathrm{Var}(\boldsymbol{\beta_{\mathrm{E}}}) }{\mathrm{Var}(\boldsymbol{\beta_{\mathrm{P}}})}\mathrm{Var}(\boldsymbol{\beta_\mathrm{ }})\] The additive genetic proportions can be conceptualized as reaction norm heritabilities for the intercept and slope parameters

\[h_{\mu}^{2} =\frac { \mathrm{Var}(\boldsymbol{\mu_{\mathrm{A}}}) }{\mathrm{Var}(\boldsymbol{\mu_{\mathrm{ }}})}\] \[h_{\beta}^{2}=\frac { \mathrm{Var}(\boldsymbol{\beta_{\mathrm{A}}}) }{\mathrm{Var}(\boldsymbol{\beta_{\mathrm{ }}})}\]

Given that there are only two individual-level random effects, the proportion of variance attributable to environmental effects is necessarily \(1-h_{\mu}^{2}\) and \(1-h_{\beta}^{2}\) for intercepts and slopes respectively. This alternative parametrization is again mathematically equivalent to the previous model, but it is much easier for Stan to estimate appropriately.

To implement this trick, we respecify the model parameters, removing the distinct genetic and environmental SDs and replacing them with common phenotypic SD scale parameters and reaction norm heritability parameters, which can subsequently be used to scale the distinct genetic and environmental standard normal deviates and correlation matrices in the transformed parameters block. This final model can thus be written as

write("

data {

int<lower=0> N;

int<lower=0> I;

int<lower=0> id[N];

vector[N] x;

vector[N] z;

matrix[I,I] A; //new relatedness matrix

}

transformed data{

matrix[I,I] LA = cholesky_decompose(A); //lower triangle relatedness matrix

}

parameters {

//fixed effects

real mu_0;

real beta_1;

//random effects

cholesky_factor_corr[2] LGcor; //additive genetic cor matrix

cholesky_factor_corr[2] LEcor; //permanent environmental cor matrix

vector<lower=0>[2] sd_P; //total phenotypic SD (removed distinct G and E SDs)

real<lower=0> sd_R;

matrix[I,2] std_G; //matrix of standard normals for G effects

matrix[I,2] std_E; //matrix of standard normals for E effects

//RN heritability (proportion between 0 and 1)

vector<lower=0,upper=1>[2] RN_h2;

}

transformed parameters {

vector<lower=0>[2] sd_G; //SDs of G effects

vector<lower=0>[2] sd_E; //SDs of E effects

matrix[I,2] re_G; //scaled G random effects

matrix[I,2] re_E; //scaled E random effects

vector[I] mu; //phenotypic individual intercepts

vector[I] beta; //phenotypic individual slopes

//SDs of genetic effects, sqrt(phenotypic variance * h2)

sd_G[1] = sd_P[1] * sqrt(RN_h2[1]); //genetic SD for ind intercepts

sd_G[2] = sd_P[2] * sqrt(RN_h2[2]); //genetic SD for ind slopes

//SDs of environmental effects, sqrt(phenotypic variance * [1-h2])

sd_E[1] = sd_P[1] * sqrt(1 - RN_h2[1]); //environment SD for ind intercepts

sd_E[2] = sd_P[2] * sqrt(1 - RN_h2[2]); //environment SD for ind slopes

//matrix normal parameterization

re_G = LA * std_G * diag_pre_multiply(sd_G, LGcor)' ;

//non-centered parameterization

re_E = std_E * diag_pre_multiply(sd_E, LEcor)' ;

//separate intercepts and slopes

mu = col(re_G, 1) + col(re_E, 1); //P = G + E

beta = col(re_G, 2) + col(re_E, 2); //P = G + E

}

model {

//model likelihood

z ~ normal(mu_0 + mu[id] + (beta_1 + beta[id]).*x, sd_R);

//priors

//fixed effects

mu_0 ~ normal(0,1);

beta_1 ~ normal(0,1);

//random effects

to_vector(std_G) ~ std_normal(); //genetic std normal deviates

to_vector(std_E) ~ std_normal(); //environmental std normal deviates

LGcor ~ lkj_corr_cholesky(2); //genetic correlations

LEcor ~ lkj_corr_cholesky(2); //environmental correlations

to_vector(sd_P) ~ cauchy(0,1); //only phenotypic scale

sd_R ~ cauchy(0,1);

//reaction norm heritability

to_vector(RN_h2) ~ beta(1.2,1.2);

}

generated quantities {

corr_matrix[2] Gcor = LGcor * LGcor'; //genetic cor

corr_matrix[2] Ecor = LEcor * LEcor'; //environmental cor

matrix[2,2] Gcov = diag_matrix(sd_G)*Gcor*diag_matrix(sd_G); //genetic cov

matrix[2,2] Ecov = diag_matrix(sd_E)*Ecor*diag_matrix(sd_E); //environmental cov

matrix[2,2] Pcov = Gcov + Ecov; //phenotypic covariance (assuming independent effects)

matrix[2,2] Pcor = inverse(diag_matrix(sd_P))*Pcov*inverse(diag_matrix(sd_P)); //phenotypic cor

//variances

vector<lower=0>[2] V_P = sd_P .* sd_P;

vector<lower=0>[2] V_G = sd_G .* sd_G;

vector<lower=0>[2] V_E = sd_E .* sd_E;

real V_R = sd_R * sd_R;

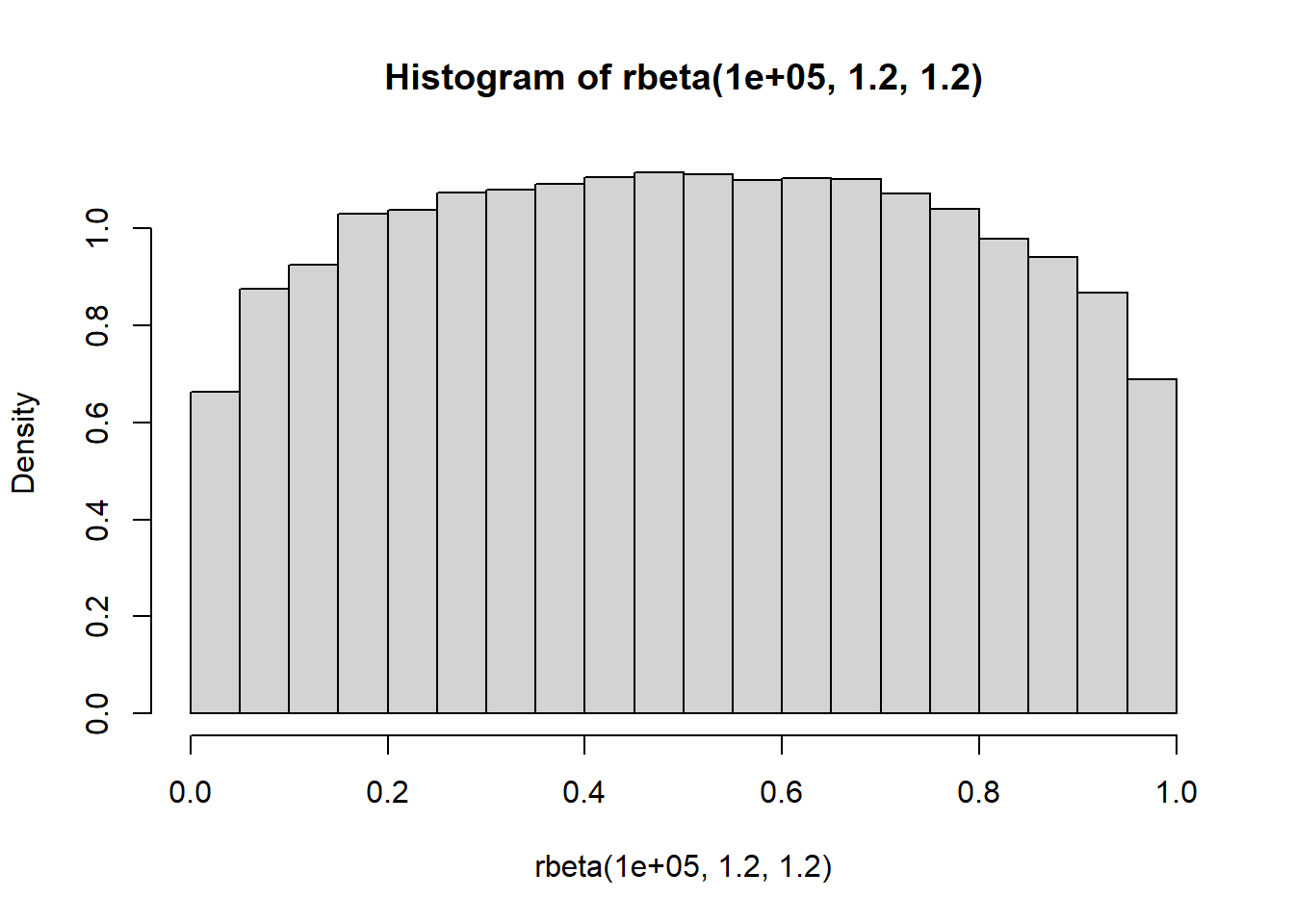

}", "mod1_QG.stan")Note that because we specify phenotypic SDs sd_P, the genetic SDs sd_G are calculated as \(\mathrm{sqrt} ({\mathrm{Var}(\boldsymbol{\mu_{\mathrm{}}})}h_{\mu}^{2})= {\mathrm{SD}(\boldsymbol{\mu_{\mathrm{}}})}\mathrm{sqrt}(h_{\mu}^{2})\) and \(\mathrm{sqrt} ({\mathrm{Var}(\boldsymbol{\beta_{\mathrm{}}})}h_{\beta}^{2})= {\mathrm{SD}(\boldsymbol{\beta_{\mathrm{}}})}\mathrm{sqrt}(h_{\beta}^{2})\), with the same approach taken for the proportion of environmental effects \(1- h_{\mu}^{2}\) and \(1-h_{\beta}^{2}\). A weakly regularizing \(\mathrm{Beta}(1.2,1.2)\) prior is placed on the reaction norm heritability parameters, which are constrained between 0 and 1. This and any other prior can be easily visualized in R by randomly sampling from the relevant distribution.

hist( rbeta(1e5, 1.2, 1.2), prob = TRUE )

This prior is therefore relatively flat and uninformative over the range of plausible values, but provides very weak regularization by giving lower relative probability at the extreme ends approaching 0 (no genetic effect) and 1 (complete genetic effect). With more than two individual-level random effects, such as when specifying multiple matrices of individual autocorrelation (Thomson et al. 2018), SDs and variances can instead be parameterized as scaled simplexes.

We can now estimate our animal model in Stan.

library(rstan)

mod1_QG = stan_model("mod1_QG.stan")## Warning in system(paste(CXX, ARGS), ignore.stdout = TRUE, ignore.stderr = TRUE): 'C:/rtools40/usr/mingw_/bin/g++' not

## found## recompiling to avoid crashing R sessionstan_resultsQG <- sampling(mod1_QG, data=stan_data, init = 0, warmup=1500, iter = 4500,

chains=4, cores=4, control=list(adapt_delta=0.90) )

#extracts posterior estimates

post = extract(stan_resultsQG)Let’s see how well we recovered the genetic and environmental (co)variances of the reaction norm parameters. Due to random sampling and our relatively small sample size, we should anticipate noisy estimates.

#RN intercepts [1] and slopes [2]

apply(post$V_P, 2, median) #phenotypic variance## [1] 0.7718333 0.4988361apply(post$V_G, 2, median) #genetic variance## [1] 0.4356050 0.2426607apply(post$V_E, 2, median) #environmental variance## [1] 0.3276453 0.2469396#RN intercept and slope correlation

median(post$Pcor[,1,2]) #phenotypic corr## [1] -0.00810428median(post$Gcor[,1,2]) #genetic corr## [1] 0.3912837median(post$Ecor[,1,2]) #environmental corr## [1] -0.4630253